New Internet that's 10,000 times faster

The internet could soon be made obsolete by "the grid". The lightning-fast replacement will be capable of downloading entire feature films within seconds. At speeds about 10,000 times faster than a typical broadband connection, the grid will be able to send the entire Rolling Stones back catalogue from Britain to Japan in less than two seconds. The latest spinoff from Cern, the particle physics centre that created the web, could also provide the power needed to transmit holographic images, allow instant online gaming with hundreds of thousands of players and offer high-definition video telephony for the price of a local call.

David Britton, professor of physics at Glasgow University and a leading figure in the grid project, believes grid technologies "could revolutionise society". "With this kind of computing power, future generations can collaborate and communicate in ways older people like me cannot even imagine," he said.

The power of the grid will become apparent this summer after what scientists at Cern have termed their "red button" day: the switching on of the Large Hadron Collider (LHC), the new particle accelerator built to probe the origin of the universe. The grid will be activated at the same time to capture the data the LHC generates. Cern, based near Geneva, started the grid computing project seven years ago when researchers realised the LHC would generate annual data equivalent to 56m CDs, enough to make a stack 50 miles high. Ironically, this meant that scientists at Cern, where Tim Berners-Lee invented the World Wide Web in 1989, would no longer be able to use his creation for fear of causing a global collapse. This is because the internet has evolved by linking together a hotchpotch of cables and routing equipment, much of which was originally designed for telephone calls and lacks the capacity for high-speed data transmission.

By contrast, the grid has been built with dedicated fibre optic cables and modern routing centres, meaning there are no outdated components to slow the deluge of data. The 55,000 servers already installed are expected to rise to 200,000 within two years.

While the web is a service for sharing information over the internet, the grid is a service for sharing computer power and data storage capacity over the internet.

The lightning-fast replacement for the internet will also consume a great deal of power. Professor Tony Doyle, technical director of the grid project, said: "We need so much processing power there would even be an issue about getting enough electricity to run the computers... The only answer was a new network powerful enough to send the data instantly to research centres in other countries." That network, effectively a parallel internet, is now built, using dedicated fibre optic cables that run from Cern to 11 centres around the world, including centres in the US, Canada, east Asia and Europe. From each centre, further connections radiate out to a host of other research institutions using existing high-speed academic networks.
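The layout described above, one source feeding 11 centres that in turn feed other institutions, is a tree. The minimal Python sketch below assumes a hypothetical five institutions per centre (the article gives no figure) and uses made-up site names; it simply shows how a breadth-first walk counts how many links data crosses to reach each site.

```python
from collections import Counter, deque


def build_tiers(num_centres: int, institutions_per_centre: int) -> dict[str, list[str]]:
    """Hypothetical topology: Cern at the root, a first tier of centres,
    and research institutions hanging off each centre."""
    topology: dict[str, list[str]] = {"Cern": []}
    for c in range(1, num_centres + 1):
        centre = f"centre-{c}"
        topology["Cern"].append(centre)
        topology[centre] = [f"{centre}/inst-{i}" for i in range(1, institutions_per_centre + 1)]
        for inst in topology[centre]:
            topology[inst] = []  # institutions are leaves in this sketch
    return topology


def hops_from_root(topology: dict[str, list[str]], root: str = "Cern") -> dict[str, int]:
    """Breadth-first walk recording how many links data crosses to reach each site."""
    hops = {root: 0}
    queue = deque([root])
    while queue:
        site = queue.popleft()
        for child in topology[site]:
            if child not in hops:
                hops[child] = hops[site] + 1
                queue.append(child)
    return hops


topo = build_tiers(num_centres=11, institutions_per_centre=5)
hops = hops_from_root(topo)
tier_counts = Counter(hops.values())  # number of sites at each distance from Cern
```

Under these assumptions every institution is two links from Cern, which is the point of the tiered design: no site has to pull data directly from the source.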

Ian Bird, project leader for Cern's high-speed computing project, said grid technology would make the internet so fast that people would stop using desktop computers to store information and entrust it all to the internet. "It will lead to what's known as 'cloud computing' where people keep all their data online and access them from anywhere," he said. Computers on the grid can also transmit data at lightning speed, as well as receive them. This will allow researchers facing heavy processing tasks to call on the assistance of thousands of other computers around the world. The aim is to eliminate the dreaded "frozen screen" experienced by internet users who ask their machine to handle too much information.
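Calling on other computers pays off when a job splits into independent pieces, such as screening many candidate compounds. The Python sketch below is a local analogy only, not grid middleware: a thread pool stands in for the remote machines, and `score_compound` is a hypothetical placeholder for the real per-compound calculation.

```python
from concurrent.futures import ThreadPoolExecutor


def score_compound(compound_id: int) -> tuple[int, int]:
    """Hypothetical stand-in for an expensive, independent per-compound calculation."""
    return compound_id, (compound_id * 7919) % 1000


def screen(compound_ids: list[int], workers: int) -> dict[int, int]:
    # Each compound is independent, so jobs can go to any worker in any order.
    # Threads stand in for remote computers; a real grid would ship the jobs
    # (and their input data) to other machines over the network.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return dict(pool.map(score_compound, compound_ids))


results = screen(list(range(1000)), workers=8)
best = max(results, key=results.get)  # compound with the highest placeholder score
```

Because no job depends on another, the result is identical to running the calculations one after another; only the wall-clock time changes.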

The real aim of the grid, however, is to work with the LHC in tracking down nature's most elusive particle, the Higgs boson. Predicted in theory but never yet found, the Higgs is supposed to be what gives matter mass. The LHC has been designed to hunt out this particle, but even at its best it will generate data on only a few thousand of the particles each year. It is such a large statistical task that the work will keep even the grid's huge capacity fully occupied for years to come.

Although the grid itself is unlikely to be directly available to domestic internet users, many telecoms providers and businesses are already introducing its pioneering technologies. One of the most potent is so-called dynamic switching, which creates a dedicated channel for internet users trying to download large volumes of data such as films. In theory, this would give a standard desktop computer the ability to download a movie in five seconds rather than the current three hours or so.

The grid is also being made available to dozens of other academic researchers, including astronomers and molecular biologists. It has already been used to help design new drugs against malaria, which kills one million people worldwide each year: researchers used the grid to analyse 140m compounds, a task that would have taken a standard internet-linked PC 420 years.

Source: SUNDAY TIMES, LONDON.
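The figures quoted in the article can be checked with simple arithmetic. The Python sketch below uses only the article's numbers plus two labelled assumptions: a hypothetical 1.5 GB film (no file size is given) and perfect scaling when the 420 PC-years of malaria screening are shared across machines.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def speedup(old_seconds: float, new_seconds: float) -> float:
    """How many times faster the new transfer is."""
    return old_seconds / new_seconds


def rate_needed(size_bytes: float, seconds: float) -> float:
    """Bits per second needed to move size_bytes in the given time."""
    return size_bytes * 8 / seconds


def days_on(machines: int, pc_years: float = 420) -> float:
    """Wall-clock days if the work divides perfectly across machines (an assumption)."""
    return pc_years / machines * 365.25


# Movie download: about three hours today versus five seconds with dynamic switching.
movie_speedup = speedup(3 * 3600, 5)

# Hypothetical 1.5 GB film: the bandwidth each download time implies.
film_bytes = 1.5e9
slow_rate = rate_needed(film_bytes, 3 * 3600)  # roughly 1.1 Mbit/s
fast_rate = rate_needed(film_bytes, 5)         # 2.4 Gbit/s

# Malaria screen: 420 PC-years spread over 140 million compounds.
per_compound = 420 * SECONDS_PER_YEAR / 140e6  # roughly 95 seconds per compound
days_on_10k = days_on(10_000)                  # roughly 15 days on 10,000 machines
```

Note that the five-seconds-versus-three-hours claim implies a speedup of about 2,160, well short of the 10,000-fold figure quoted for the grid's own links.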

NANOTECHNOLOGY

Technology has greatly changed human life: we now live with technology, for technology and by technology.

Nanotechnology is a highly multidisciplinary field, drawing on applied physics, materials science, interface and colloid science, device physics, supramolecular chemistry (the area of chemistry that focuses on the noncovalent bonding interactions of molecules), self-replicating machines and robotics, chemical engineering, mechanical engineering, biological engineering and electrical engineering. Grouping these sciences under the umbrella of "nanotechnology" has been questioned on the grounds that there is little actual boundary-crossing between the sciences that operate at the nanoscale. Instrumentation is the only area of technology common to all these disciplines; by contrast, the pharmaceutical and semiconductor industries, for example, do not "talk with each other". Corporations that call their products "nanotechnology" typically market them only to a certain industrial cluster.[1]

Two main approaches are used in nanotechnology. In the "bottom-up" approach, materials and devices are built from molecular components that assemble themselves chemically by principles of molecular recognition. In the "top-down" approach, nano-objects are constructed from larger entities without atomic-level control. The impetus for nanotechnology comes from a renewed interest in interface and colloid science, coupled with a new generation of analytical tools such as the atomic force microscope (AFM) and the scanning tunneling microscope (STM). Combined with refined processes such as electron beam lithography and molecular beam epitaxy, these instruments allow the deliberate manipulation of nanostructures and have led to the observation of novel phenomena.

Examples of nanotechnology include the manufacture of polymers based on molecular structure and the design of computer chip layouts based on surface science. Despite the promise of nanotechnologies such as quantum dots and nanotubes, real commercial applications have mainly used the advantages of colloidal nanoparticles in bulk form, in products and uses such as suntan lotion, cosmetics, protective coatings, drug delivery[2] and stain-resistant clothing.

INTERNET

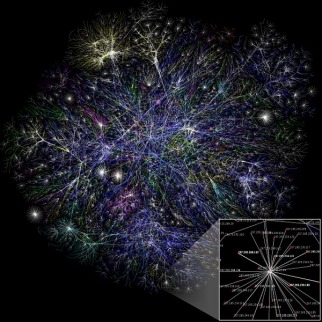

The Internet is a worldwide, publicly accessible series of interconnected computer networks that transmit data by packet switching using the standard Internet Protocol (IP). It is a "network of networks" that consists of millions of smaller domestic, academic, business, and government networks, which together carry various information and services, such as electronic mail, online chat, file transfer, and the interlinked web pages and other resources of the World Wide Web (WWW).
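Packet switching means a message is cut into small, separately addressed pieces that can travel independently and be put back together at the destination. The toy Python sketch below shows only that idea: `Packet`, `packetize` and `reassemble` are hypothetical names, real IP packets carry much richer headers, and in the real Internet putting data back in order is the job of TCP rather than IP itself.

```python
import random
from typing import NamedTuple


class Packet(NamedTuple):
    seq: int        # position of this chunk in the original message
    payload: bytes  # the chunk of data itself


def packetize(message: bytes, size: int) -> list[Packet]:
    """Cut a message into fixed-size chunks, each tagged with a sequence number."""
    return [Packet(i, message[off:off + size])
            for i, off in enumerate(range(0, len(message), size))]


def reassemble(packets: list[Packet]) -> bytes:
    # Packets may take different routes and arrive out of order,
    # so the receiver sorts by sequence number before joining them.
    return b"".join(p.payload for p in sorted(packets, key=lambda p: p.seq))


msg = b"A network of networks carrying mail, chat, files and web pages."
packets = packetize(msg, size=8)
random.shuffle(packets)  # simulate out-of-order arrival
restored = reassemble(packets)
```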

Creation

The USSR's launch of Sputnik spurred the United States to create the Advanced Research Projects Agency, known as ARPA, in February 1958 to regain a technological lead.[1][2] ARPA created the Information Processing Technology Office (IPTO) to further the research of the Semi-Automatic Ground Environment (SAGE) program, which had networked country-wide radar systems together for the first time. J. C. R. Licklider was selected to head the IPTO, and saw universal networking as a potential unifying human revolution.

Licklider moved from the Psycho-Acoustic Laboratory at Harvard University to MIT in 1950, after becoming interested in information technology. At MIT, he served on a committee that established Lincoln Laboratory and worked on the SAGE project. In 1957 he became a Vice President at BBN, where he bought the first production PDP-1 computer and conducted the first public demonstration of time-sharing.

At the IPTO, Licklider recruited Lawrence Roberts to head a project to implement a network, and Roberts based the technology on the work of Paul Baran, who had written an exhaustive study for the U.S. Air Force that recommended packet switching (as opposed to circuit switching) to make a network highly robust and survivable. After much work, the first two nodes of what would become the ARPANET were interconnected between UCLA and SRI International in Menlo Park, California, on October 29, 1969. The ARPANET was one of the progenitor networks of today's Internet.

Following the demonstration that packet switching worked on the ARPANET, the British Post Office, Telenet, DATAPAC and TRANSPAC collaborated to create the first international packet-switched network service, known in the UK as the International Packet Switched Service (IPSS), in 1978. The collection of X.25-based networks grew from Europe and the US to cover Canada, Hong Kong and Australia by 1981. The X.25 packet switching standard was developed in the CCITT (now called ITU-T) around 1976.

X.25 was independent of the TCP/IP protocols that arose from DARPA's experimental work on the ARPANET, Packet Radio Net and Packet Satellite Net during the same period. Vinton Cerf and Robert Kahn developed the first description of the TCP protocols during 1973 and published a paper on the subject in May 1974. Use of the term "Internet" to describe a single global TCP/IP network originated in December 1974 with the publication of RFC 675, the first full specification of TCP, written by Vinton Cerf, Yogen Dalal and Carl Sunshine, then at Stanford University. During the next nine years, work proceeded to refine the protocols and to implement them on a wide range of operating systems.

The first TCP/IP wide area network was made operational by January 1, 1983, when all hosts on the ARPANET were switched over from the older NCP protocols to TCP/IP. In 1985, the United States' National Science Foundation (NSF) commissioned the construction of a 56 kbit/s university network backbone using computers called "fuzzballs" by their inventor, David L. Mills. The following year, NSF sponsored the development of a higher-speed 1.5 Mbit/s backbone that became the NSFNET. A key decision to use the DARPA TCP/IP protocols was made by Dennis Jennings, then in charge of the Supercomputer program at NSF.

The opening of the network to commercial interests began in 1988. The US Federal Networking Council approved the interconnection of the NSFNET to the commercial MCI Mail system that year, and the link was made in the summer of 1989. Other commercial e-mail services were soon connected, including OnTyme, Telemail and CompuServe. In that same year, three commercial Internet service providers (ISPs) were created: UUNET, PSINET and CERFNET. Important separate networks that offered gateways into, and later merged with, the Internet include Usenet and BITNET. Various other commercial and educational networks, such as Telenet, Tymnet, CompuServe and JANET, were interconnected with the growing Internet. Telenet (later called Sprintnet) was a large privately funded national computer network with free dial-up access in cities throughout the U.S. that had been in operation since the 1970s. This network was eventually interconnected with the others in the 1980s as the TCP/IP protocol became increasingly popular.

The ability of TCP/IP to work over virtually any pre-existing communication network made growth easy, although the rapid growth of the Internet was due primarily to the availability of commercial routers from companies such as Cisco Systems, Proteon and Juniper, the availability of commercial Ethernet equipment for local-area networking and the widespread implementation of TCP/IP on the UNIX operating system.
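TCP/IP could ride on so many existing networks because of layering: an IP packet is carried as an opaque payload inside whatever framing the underlying link uses, and a router only swaps that outer wrapper as the packet moves from one kind of network to another. The toy Python sketch below shows the idea; its text header (`link|length|`) is a made-up stand-in, since real Ethernet or X.25 frames are binary and far more complex, and `frame_for_link` and `unwrap` are hypothetical names.

```python
def frame_for_link(link: str, ip_packet: bytes) -> bytes:
    """Wrap an IP packet in a simplified, hypothetical link-layer frame.

    The link name must not contain '|', which this toy format uses as a separator.
    """
    header = f"{link}|{len(ip_packet)}|".encode()
    return header + ip_packet


def unwrap(frame: bytes) -> bytes:
    """Strip the toy link-layer header and return the IP packet untouched."""
    _link, length, rest = frame.split(b"|", 2)  # payload may itself contain '|'
    return rest[: int(length.decode())]


# A router takes the packet off one kind of link and puts it on another;
# the IP packet itself passes through unchanged.
packet = b"hypothetical IP datagram"
on_x25 = frame_for_link("x25", packet)
on_ethernet = frame_for_link("ethernet", unwrap(on_x25))
delivered = unwrap(on_ethernet)
```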

Visualization of the various routes through a portion of the Internet.